- 09 Jun 2025

Caching in Preset

Overview

Preset works with a wide variety of data. Some of it is readily accessible and can be fetched from its source whenever it is needed. This does not hold true for every source of information, however. In those cases, the data needs to be cached so that it can be accessed more efficiently.

In this article, we introduce the use cases for Preset's caching capabilities, explain how they lead to faster access, and describe the relevant backend architecture. We also look at how users can manage caching to help improve performance.

In all scenarios, Preset uses a Redis cache with a 24-hour timeout by default, and identical queries are served from the most recent cached results.
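The default behavior, where an identical query within the timeout window is answered from the cache, can be sketched as a small time-to-live (TTL) cache. This is a minimal in-memory stand-in for Redis written for illustration only; the class `TTLCache`, the hashing in `query_key`, and the injectable clock are assumptions, not Preset's implementation.

```python
import hashlib
import time
from typing import Any, Callable, Dict, Optional, Tuple

DEFAULT_TIMEOUT = 60 * 60 * 24  # 24 hours, in seconds


class TTLCache:
    """Hypothetical in-memory stand-in for a Redis cache with per-key expiry."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self._clock = clock  # injectable so expiry can be demonstrated without waiting
        self._store: Dict[str, Tuple[Any, float]] = {}

    def set(self, key: str, value: Any, timeout: int = DEFAULT_TIMEOUT) -> None:
        # Store the value with the absolute time at which it expires.
        self._store[key] = (value, self._clock() + timeout)

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]  # expired entries are evicted and treated as misses
            return None
        return value


def query_key(sql: str) -> str:
    # Identical query text hashes to the same key, so a repeated query is a cache hit.
    return hashlib.sha256(sql.encode("utf-8")).hexdigest()


now = [0.0]
cache = TTLCache(clock=lambda: now[0])
key = query_key("SELECT region, SUM(sales) FROM orders GROUP BY region")
cache.set(key, [("EU", 100)])

now[0] = 60 * 60  # one hour later: the identical query is served from the cache
print(cache.get(key))  # [('EU', 100)]

now[0] = DEFAULT_TIMEOUT  # 24 hours later: the entry has expired
print(cache.get(key))  # None
```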

Caching Chart Data on a Dashboard

Cache JSON results via Redis

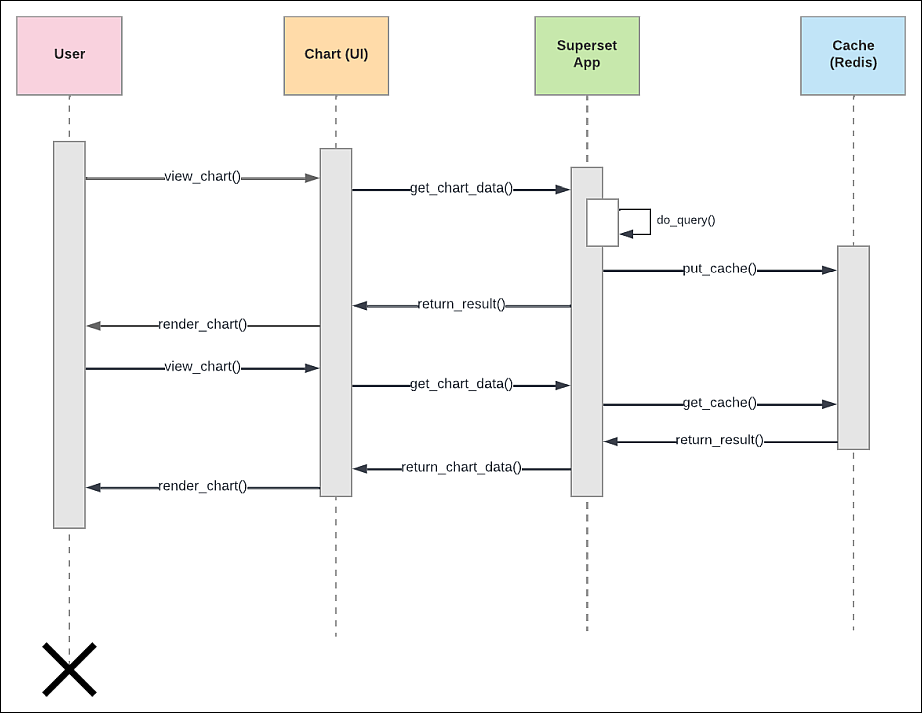

This use case serves as a speed layer between the web app and the analytics databases that Preset connects to. Query results used to build charts are cached to speed up subsequent requests for the same chart. Any defined filter options are cached as well.

Architecture: Caching visualization data
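The speed layer follows the common cache-aside pattern: check the cache first and query the database only on a miss. The sketch below is illustrative only; `ChartDataService`, `fake_database`, and the plain dictionary used in place of Redis are assumptions rather than Preset's actual code.

```python
from typing import Callable, Dict, List, Tuple

Rows = List[Tuple]


class ChartDataService:
    """Hypothetical speed layer between a web app and an analytics database."""

    def __init__(self, run_query: Callable[[str], Rows]) -> None:
        self._run_query = run_query          # function that actually hits the database
        self._cache: Dict[str, Rows] = {}    # stand-in for the shared Redis cache

    def get_chart_data(self, sql: str) -> Rows:
        # Cache-aside: return cached rows when present, otherwise query and store them.
        if sql in self._cache:
            return self._cache[sql]
        rows = self._run_query(sql)
        self._cache[sql] = rows
        return rows


database_calls: List[str] = []


def fake_database(sql: str) -> Rows:
    # Records every call so we can see how often the database is actually hit.
    database_calls.append(sql)
    return [("EU", 100), ("US", 250)]


service = ChartDataService(fake_database)
sql = "SELECT region, SUM(sales) FROM orders GROUP BY region"
service.get_chart_data(sql)  # first load of the chart: queries the database
service.get_chart_data(sql)  # second load: served from the cache
print(len(database_calls))  # 1
```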

Cache Management

To improve cache performance and reduce dashboard load times, you can pre-populate the cache by scheduling reports linked to your dashboards. In Preset, when you configure a report with the "Ignore cache" option enabled, it triggers a fresh query that updates the cache with the latest data. As a result, when users later open the dashboard, they benefit from faster load times and up-to-date information without triggering new queries against the database. This practice is often referred to as “warming up the cache.”
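An "Ignore cache" report run can be pictured as a forced refresh: the cache read is skipped, the database is queried, and the cached entry is overwritten so that later dashboard viewers get the fresh result. The function `get_chart_data` and its `force` flag are hypothetical names used only for this sketch.

```python
from typing import Callable, Dict, List, Tuple

Rows = List[Tuple]


def get_chart_data(
    sql: str,
    cache: Dict[str, Rows],
    run_query: Callable[[str], Rows],
    force: bool = False,
) -> Rows:
    """Return rows for `sql`; force=True mimics a report run with "Ignore cache"."""
    if not force and sql in cache:
        return cache[sql]
    rows = run_query(sql)  # fresh query against the database
    cache[sql] = rows      # overwrite the entry so later viewers get fresh data
    return rows


# Simulated database whose contents change between runs.
table = {"sales": 100}
calls: List[str] = []


def run_query(sql: str) -> Rows:
    calls.append(sql)
    return [("total", table["sales"])]


cache: Dict[str, Rows] = {}
sql = "SELECT SUM(sales) FROM orders"
get_chart_data(sql, cache, run_query)              # initial load fills the cache
table["sales"] = 120                               # new data lands in the database
get_chart_data(sql, cache, run_query, force=True)  # scheduled report warms the cache
print(get_chart_data(sql, cache, run_query))       # [('total', 120)], no new query
print(len(calls))                                  # 2
```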

Note that cached data is specific to the filter combination applied to the dashboard or chart. Changing a filter modifies the underlying SQL, so a filter change still triggers a request to the database.
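To see why a filter change reaches the database, consider a cache key derived from the generated SQL. In this sketch, `build_sql` and `cache_key` are hypothetical helpers, and sorting filter columns and values so that the same combination always yields the same SQL is an assumption made for the example.

```python
import hashlib
from typing import Dict, List


def build_sql(filters: Dict[str, List[str]]) -> str:
    """Hypothetical SQL generator: each filter becomes part of the WHERE clause."""
    clauses = []
    for column, values in sorted(filters.items()):
        quoted = ", ".join(f"'{v}'" for v in sorted(values))
        clauses.append(f"{column} IN ({quoted})")
    where = (" WHERE " + " AND ".join(clauses)) if clauses else ""
    return f"SELECT region, SUM(sales) FROM orders{where} GROUP BY region"


def cache_key(sql: str) -> str:
    # The key is derived from the final SQL, so any filter change yields a new key.
    return hashlib.sha256(sql.encode("utf-8")).hexdigest()


eu = cache_key(build_sql({"region": ["EU"]}))
eu_again = cache_key(build_sql({"region": ["EU"]}))
us = cache_key(build_sql({"region": ["US"]}))
print(eu == eu_again)  # True: same filter combination, cache hit
print(eu == us)        # False: filter changed, new database query
```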

Additionally, while caching can significantly accelerate dashboard load times and shield your database from heavy, repetitive workloads, it does little to improve interactive workflows such as exploring data on the Chart Builder page or applying filters to a dashboard.

Caching with SQL Lab Queries

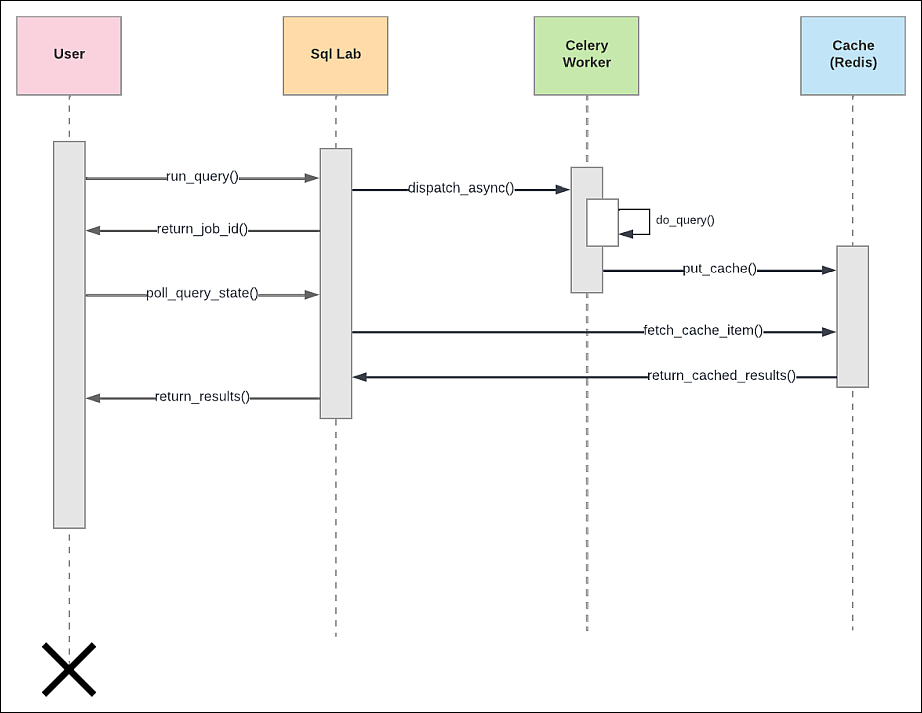

Using asynchronous mode for SQL Lab queries

When a database has Asynchronous query execution enabled, SQL Lab queries are dispatched to Celery workers, which write their results to a shared cache. This improves performance because web app instances can quickly retrieve the results for display to the Preset user.

If Asynchronous query execution is not enabled, the SQL query runs within the web request instead of being dispatched to a Celery worker.
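The difference between the two execution modes can be sketched with a thread pool standing in for Celery workers and a locked dictionary standing in for the shared results cache. All names here (`run_sync`, `submit_async`, `fetch_results`) are hypothetical, and the real system's dispatching, polling, and result storage may work differently.

```python
import threading
import time
import uuid
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Optional, Tuple

Rows = List[Tuple]

results_cache: Dict[str, Rows] = {}          # stand-in for the shared results cache
cache_lock = threading.Lock()
workers = ThreadPoolExecutor(max_workers=2)  # stand-in for Celery workers


def run_sync(sql: str, run_query: Callable[[str], Rows]) -> Rows:
    # Asynchronous execution disabled: the query runs inside the web request.
    return run_query(sql)


def submit_async(sql: str, run_query: Callable[[str], Rows]) -> str:
    # Asynchronous execution enabled: hand the query to a worker and immediately
    # return a key the web app can later use to look up the results.
    results_key = str(uuid.uuid4())

    def task() -> None:
        rows = run_query(sql)
        with cache_lock:
            results_cache[results_key] = rows  # the worker writes to the shared cache

    workers.submit(task)
    return results_key


def fetch_results(results_key: str) -> Optional[Rows]:
    # Any web app instance can read the shared cache; None means "not ready yet".
    with cache_lock:
        return results_cache.get(results_key)


def slow_database(sql: str) -> Rows:
    time.sleep(0.1)  # simulate a long-running analytics query
    return [("EU", 100)]


key = submit_async("SELECT region, SUM(sales) FROM orders GROUP BY region", slow_database)
rows = None
for _ in range(500):  # poll for up to about five seconds
    rows = fetch_results(key)
    if rows is not None:
        break
    time.sleep(0.01)
print(rows)  # [('EU', 100)]
workers.shutdown()
```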

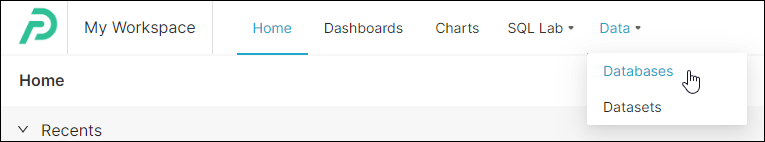

How to enable asynchronous query execution

Asynchronous query execution is disabled by default. To take advantage of the shared cache described above, you need to enable it for a database:

- Navigate to the Databases screen.

- Select the pencil Edit icon for a database.

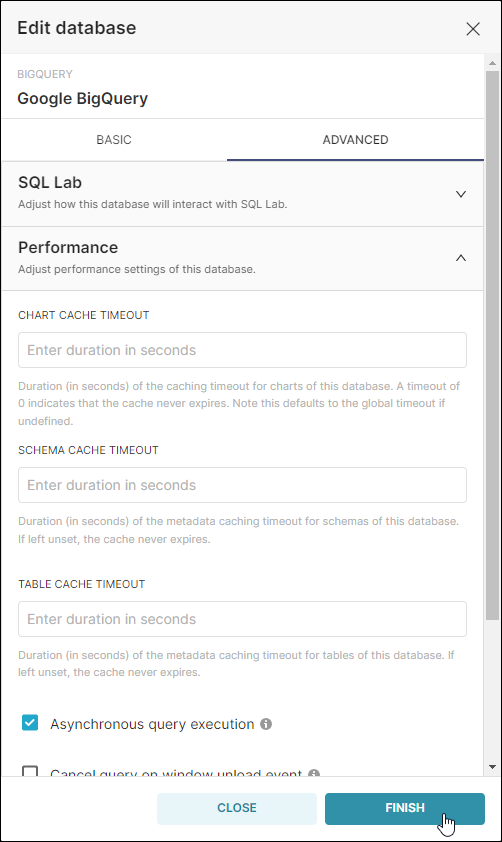

- Select the Advanced tab and then expand the Performance section.

- Select Asynchronous query execution to enable.

- When done, select Finish.

Architecture: Caching with SQL Lab queries

Caching Other Elements

Caching various "memoized" functions

The Preset codebase uses caching in several other places to speed up repetitive tasks performed against the platform's metadata store. Examples include fetching the databases available to a given user, parsing column types for a given analytics database, and fetching table names for SQL Lab.
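Memoization with an expiry can be sketched as a decorator that caches results per argument combination. The decorator `memoized` and the function `fetch_table_names` are hypothetical examples, not functions from the Preset codebase.

```python
import functools
import time
from typing import Any, Callable, Dict, List, Tuple


def memoized(timeout: float) -> Callable:
    """Hypothetical decorator: caches results per argument tuple for `timeout` seconds."""

    def decorator(func: Callable) -> Callable:
        store: Dict[Tuple, Tuple[Any, float]] = {}

        @functools.wraps(func)
        def wrapper(*args: Any) -> Any:
            now = time.monotonic()
            hit = store.get(args)
            if hit is not None and now < hit[1]:
                return hit[0]  # fresh entry: skip the expensive call
            value = func(*args)
            store[args] = (value, now + timeout)
            return value

        return wrapper

    return decorator


metadata_lookups: List[Tuple[str, str]] = []


@memoized(timeout=600)
def fetch_table_names(database: str, schema: str) -> List[str]:
    # Stand-in for a slow lookup against the metadata store or database catalog.
    metadata_lookups.append((database, schema))
    return ["orders", "customers"]


fetch_table_names("analytics", "public")
fetch_table_names("analytics", "public")  # served from the memoized cache
fetch_table_names("analytics", "sales")   # different arguments: a new lookup
print(len(metadata_lookups))  # 2
```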

Cache dashboard thumbnails

As part of the dashboard thumbnail system, thumbnails are cached to reduce the time spent fetching images from the S3 bucket where they are stored.

Setting Cache Timeouts

A cache timeout is the duration, in seconds, that data for a table, schema, chart, or dataset remains accessible in the Preset cache. Each of these values can be defined; any value that is not set defaults to the global timeout. If a timeout is set to 0, the cache never expires.

The globally set cache timeout determines the default duration (in seconds) for which data remains valid in the cache if no specific timeout is configured at the table, schema, chart, or dataset level.

In Preset, this global timeout is defined in the backend configuration. It applies across the application unless overridden at a more granular level, such as directly on a chart or dataset. Users cannot modify the global timeout, because it is a fixed value across all Preset workspaces. For managed Preset environments, the global value is currently one day (24 hours): '60 * 60 * 24', or 86,400 seconds.

While you may not have direct access to change this global value, you can always set custom cache timeouts at specific levels:

- Database Level: You can set a specific cache timeout for an entire database.

- Dataset Level: Individual datasets can have their own cache timeout settings.

- Chart Level: Specific charts can have customized cache timeouts.

In short, you can set cache timeouts at any of these granular levels, but not globally.
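The fallback from granular settings to the global timeout can be sketched as follows. This assumes the most specific setting wins (chart, then dataset, then database, then the global value) and that an unset level is represented by `None`; both are assumptions for illustration, and `effective_timeout` is a hypothetical helper. A value of 0 (never expire) must be checked with `is not None` rather than truthiness, or it would be silently ignored.

```python
from typing import Optional

GLOBAL_CACHE_TIMEOUT = 60 * 60 * 24  # 86,400 seconds; fixed for managed Preset workspaces


def effective_timeout(
    chart: Optional[int] = None,
    dataset: Optional[int] = None,
    database: Optional[int] = None,
) -> int:
    """Return the timeout that applies, assuming the most specific level wins.

    None means "not set". 0 is a valid setting (never expire), so each level is
    tested with `is not None` instead of truthiness.
    """
    for value in (chart, dataset, database):
        if value is not None:
            return value
    return GLOBAL_CACHE_TIMEOUT


print(effective_timeout())                          # 86400: global default
print(effective_timeout(database=3600))             # 3600: database level
print(effective_timeout(chart=300, dataset=86400))  # 300: chart level wins
print(effective_timeout(chart=0, database=3600))    # 0: never expires
```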

When defining cache timeouts, a major consideration is how frequently your data changes. Infrequently changed elements, such as schemas, can use a cache timeout as long as 4 weeks; conversely, a chart that uses real-time data may need a timeout as low as 5 minutes.

Let's have a closer look at some potential cache timeout settings, in order from longest to shortest duration.

Schema

In general, schemas do not change very often, so a longer cache timeout is appropriate.

Recommended cache timeout: 1 to 4 weeks (604,800 to 2,419,200 seconds)

Dataset

Like schemas, datasets change infrequently: columns are rarely altered (added or removed), and column types rarely change.

Recommended cache timeout: 1 day to 1 week (86,400 to 604,800 seconds)

Table

Generally speaking, table cache timeouts should be shorter. If your users frequently create their own tables, a smaller value is recommended so that new tables appear promptly in drop-downs. Also consider the database being used: in Apache Druid, for example, creating a table takes time and planning, so a longer cache timeout is more appropriate.

Recommended cache timeout: 1 hour to 1 week (3,600 to 604,800 seconds)

Chart

Reserve the shortest cache durations for charts, which may need access to more recent data, particularly when critical real-time data is involved.

Recommended cache timeout: 5 minutes to 1 day (300 to 86,400 seconds)
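The second values in the recommendations above can be double-checked by computing them from unit constants. This is only arithmetic; the names `RECOMMENDED`, `WEEK`, and so on are illustrative.

```python
MINUTE = 60
HOUR = 60 * MINUTE
DAY = 24 * HOUR
WEEK = 7 * DAY

# Recommended (minimum, maximum) cache timeouts from the guidance above, in seconds.
RECOMMENDED = {
    "schema": (1 * WEEK, 4 * WEEK),
    "dataset": (1 * DAY, 1 * WEEK),
    "table": (1 * HOUR, 1 * WEEK),
    "chart": (5 * MINUTE, 1 * DAY),
}

for level, (low, high) in RECOMMENDED.items():
    print(f"{level:8} {low:>9,} to {high:>9,} seconds")
```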

How to Define Cache Timeouts

Define cache for schemas, tables, and charts

Follow the navigation directions above for enabling asynchronous query execution and, in the Performance section, enter cache timeout values for the Chart Cache Timeout, Schema Cache Timeout, and Table Cache Timeout fields, as needed.

Define cache for datasets

- Navigate to the Datasets screen.

- Select the pencil Edit icon for a dataset.

- Select the Settings tab.

- In the Cache Timeout field, enter a value.

- When done, select Save.

Per-user Caching

Preset offers Per-user Caching on the Enterprise Plan for teams that have stricter data policies or that use external platforms to manage data access. Preset's default cache setting assumes that users viewing a dashboard have access to the same information as the user who first viewed it. With Per-user Caching, Preset caches generated data on a user-by-user basis. Each user has their own set of cached data, so Preset only shows the cached version of a dashboard or chart if that user previously visited the same dashboard or chart within the last 24 hours (with default settings).
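One way to picture the difference is a cache key that includes the user ID only when per-user caching is on. `chart_cache_key` is a hypothetical helper for illustration; this sketch does not describe how Preset actually scopes per-user cache entries.

```python
import hashlib
import json
from typing import Dict, Optional


def chart_cache_key(sql: str, user_id: Optional[str] = None) -> str:
    """Hypothetical key builder: with per-user caching, the user ID is part of the key."""
    payload: Dict[str, str] = {"sql": sql}
    if user_id is not None:
        payload["user_id"] = user_id  # scopes the cache entry to a single user
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


sql = "SELECT region, SUM(sales) FROM orders GROUP BY region"
print(chart_cache_key(sql) == chart_cache_key(sql))  # True: default mode shares one entry
print(chart_cache_key(sql, user_id="alice") == chart_cache_key(sql, user_id="bob"))  # False
```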